Ji Zhang

I am Ji Zhang (张继), an Assistant Professor in the School of Computing and Artificial Intelligence at Southwest Jiaotong University (SWJTU). I received my Ph.D. degree in 2024 from the School of Computer Science and Engineering, University of Electronic Science and Technology of China (UESTC), where I was very fortunate to be advised by Prof. Jingkuan Song and Prof. Lianli Gao. My research interests include robotics, computer vision, and few-shot learning. I am particularly interested in designing advanced algorithms that exploit robotic foundation models, i.e., vision-language-action models (VLAs), to tackle challenging real-world robotic applications. If you are interested in related topics, please do not hesitate to reach out.

News

[06/2025] Our paper on few-shot learning was accepted by TPAMI.

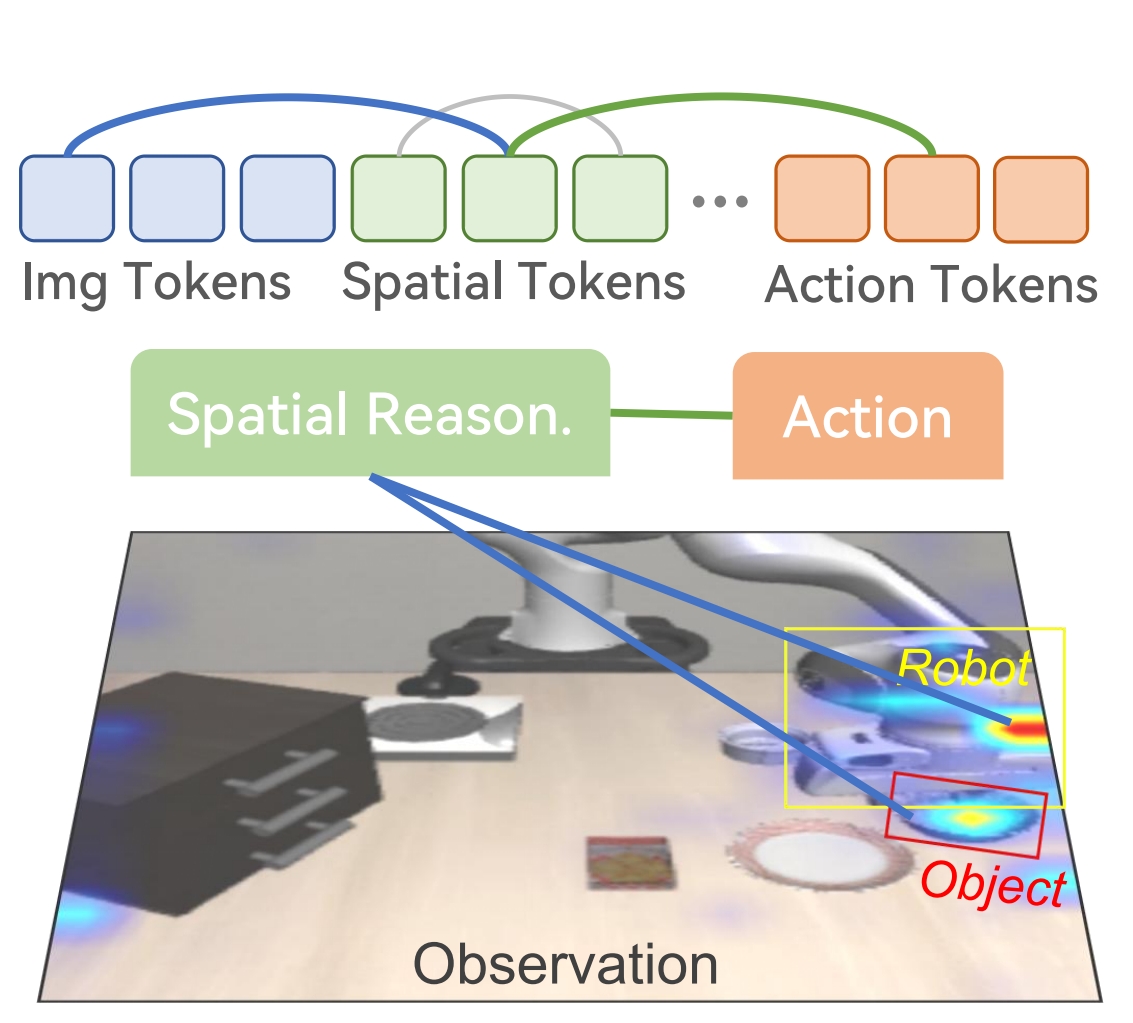

InSpire: Vision-Language-Action Models with Intrinsic Spatial Reasoning

Ji Zhang*, Shihan Wu*, Xu Luo, Hao Wu, Lianli Gao, Heng Tao Shen, Jingkuan Song

arXiv, 2025 [Paper][Code][Project]

Mitigating the adverse effects of spurious correlations by boosting the spatial reasoning ability of vision-language-action (VLA) models.

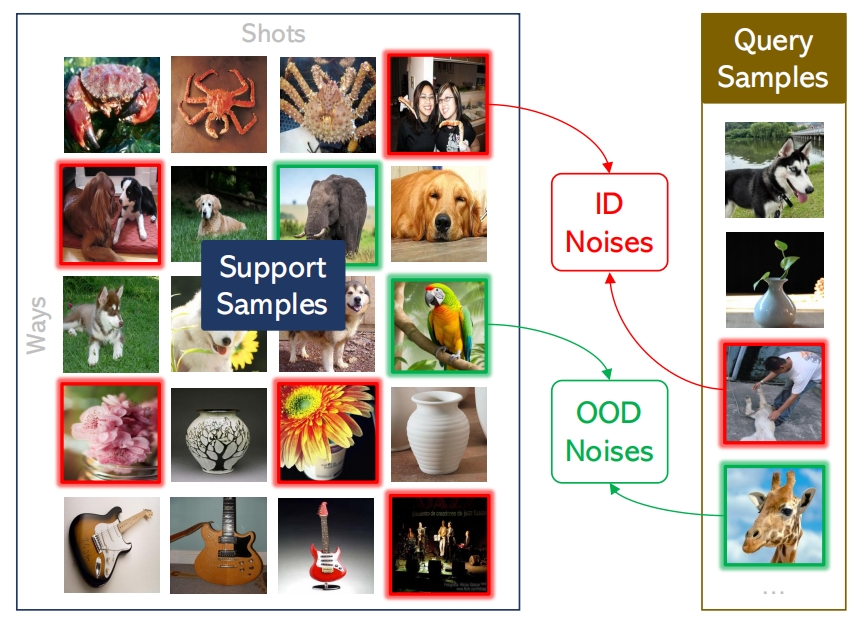

Reliable Few-shot Learning under Dual Noises

Ji Zhang, Jingkuan Song, Lianli Gao, Nicu Sebe, Heng Tao Shen

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2025 [Paper][Code]

Addressing both in-distribution (ID) and out-of-distribution (OOD) noise in the support and query samples of few-shot learning tasks within a unified framework.

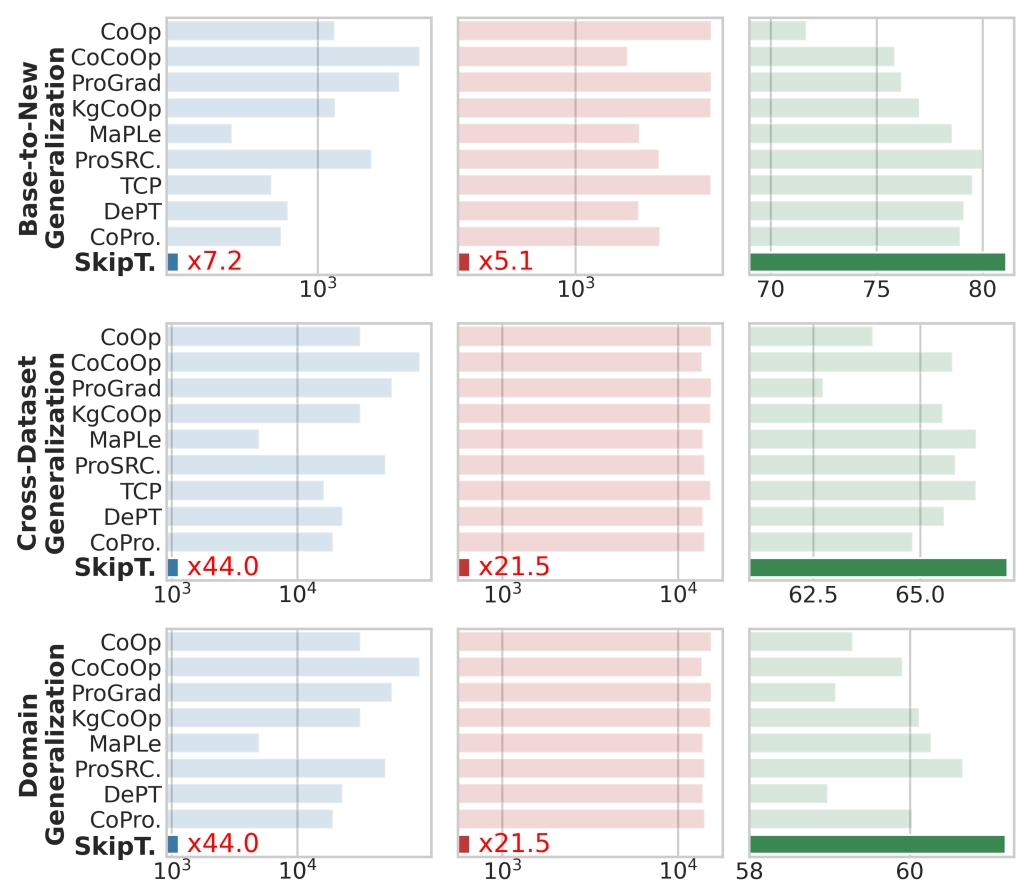

Skip Tuning: Pre-trained Vision-Language Models are Effective and Efficient Adapters Themselves

Shihan Wu, Ji Zhang#, Pengpeng Zeng, Lianli Gao, Jingkuan Song, Heng Tao Shen

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025 [Paper][Code]

Achieving effective and efficient adaptation of large pre-trained vision-language models.

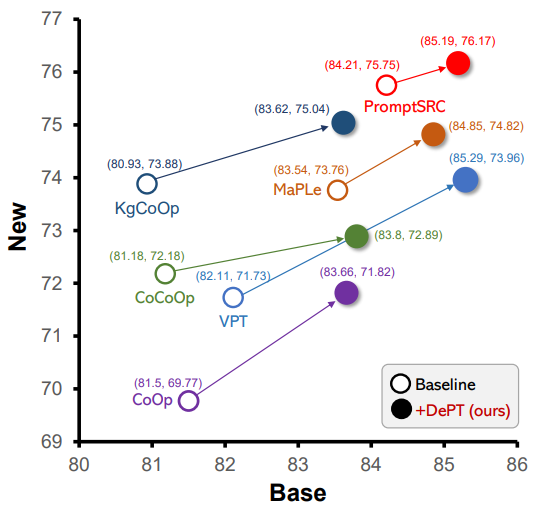

DePT: Decoupled Prompt Tuning

Ji Zhang*, Shihan Wu*, Lianli Gao, Heng Tao Shen, Jingkuan Song

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024 [Paper][Code]

Overcoming the base-new trade-off (BNT) problem of existing prompt tuning methods.

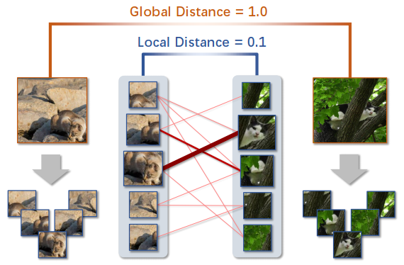

From Global to Local: Multi-scale Out-of-distribution Detection

Ji Zhang, Lianli Gao, Bingguang Hao, Hao Huang, Jingkuan Song, Heng Tao Shen

IEEE Transactions on Image Processing (TIP), 2023 [Paper][Code]

Leveraging both the global visual information and the local region details of images to benefit out-of-distribution (OOD) detection.

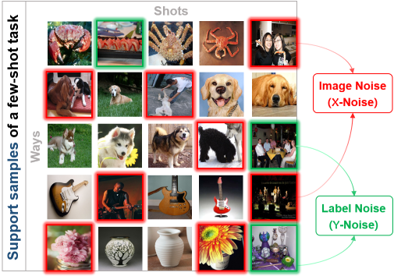

DETA: Denoised Task Adaptation for Few-shot Learning

Ji Zhang, Lianli Gao, Xu Luo, Heng Tao Shen, Jingkuan Song

IEEE International Conference on Computer Vision (ICCV), 2023 [Paper][Code]

Tackling both the X-noise (i.e., image noise) and the Y-noise (i.e., label noise) of test-time few-shot tasks in a unified framework.

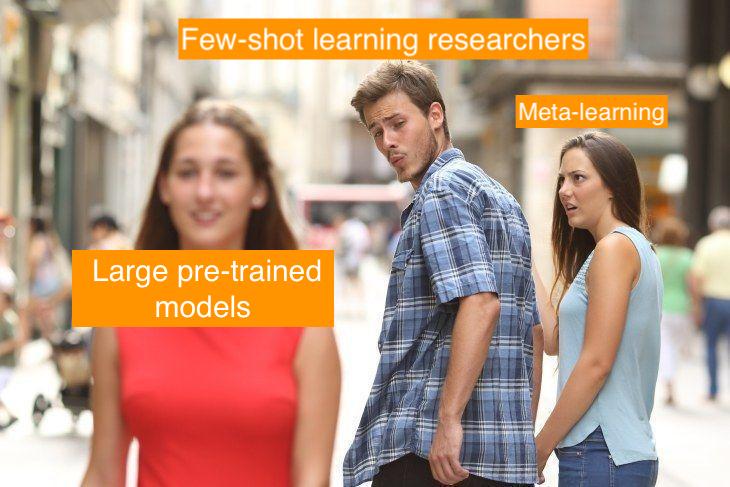

A Closer Look at Few-shot Classification Again

Xu Luo*, Hao Wu*, Ji Zhang, Lianli Gao, Jing Xu, Jingkuan Song

International Conference on Machine Learning (ICML), 2023 [Paper][Code]

Empirically demonstrating that the training algorithms and the test-time adaptation algorithms in few-shot classification can be disentangled.

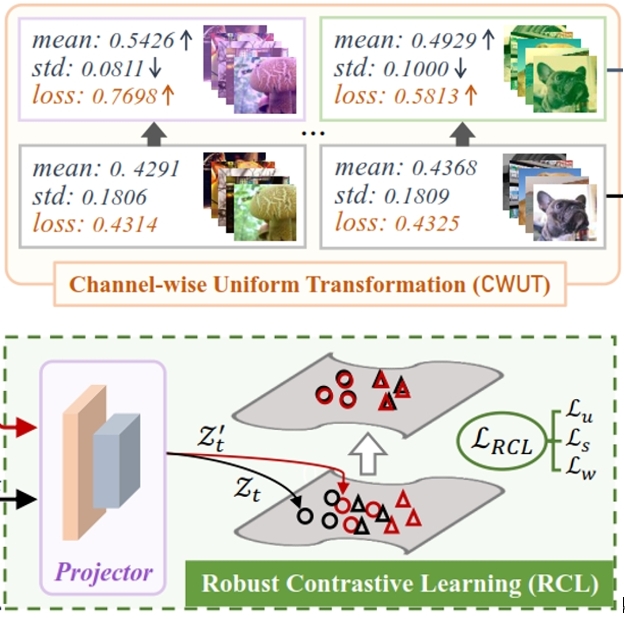

Free-lunch for Cross-domain Few-shot learning: Style-aware Episodic Training with Robust Contrastive Learning

Ji Zhang, Jingkuan Song, Lianli Gao, Heng Tao Shen

ACM International Conference on Multimedia (ACM MM), 2022 [Paper][Code]

Addressing the side effects of the style shift between tasks from the source and target domains.

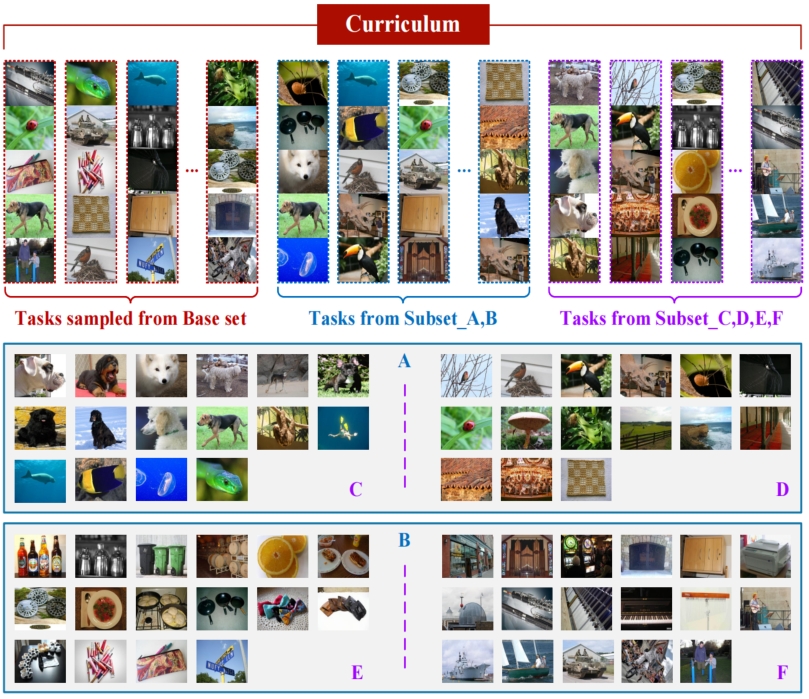

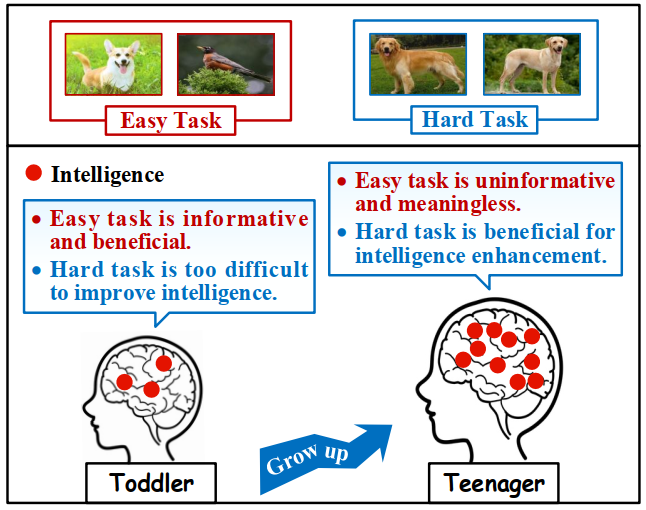

Progressive Meta-learning with Curriculum

Ji Zhang, Jingkuan Song, Lianli Gao, Ye Liu, Heng Tao Shen

IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), 2022 [Paper][Code]

An extended version of the ACM MM'21 paper, in which the curriculum is integrated into the objective as a regularization term so that the meta-learner can adaptively measure the hardness of tasks.

Curriculum-based Meta-learning

Ji Zhang, Jingkuan Song, Yazhou Yao, Lianli Gao

ACM International Conference on Multimedia (ACM MM), 2021 [Paper][Code]

Progressively improving the meta-learner by performing episodic training on simulated tasks ordered from easy to hard, i.e., in a curriculum learning manner.

Academic Service

I am a reviewer for several top journals and conferences, e.g., CVPR, ICCV, NeurIPS, ICLR, ACM MM, AAAI, IEEE TPAMI, and IEEE TIP.

This well-designed template is borrowed from Jon Barron.